Data Node Workload

Overview

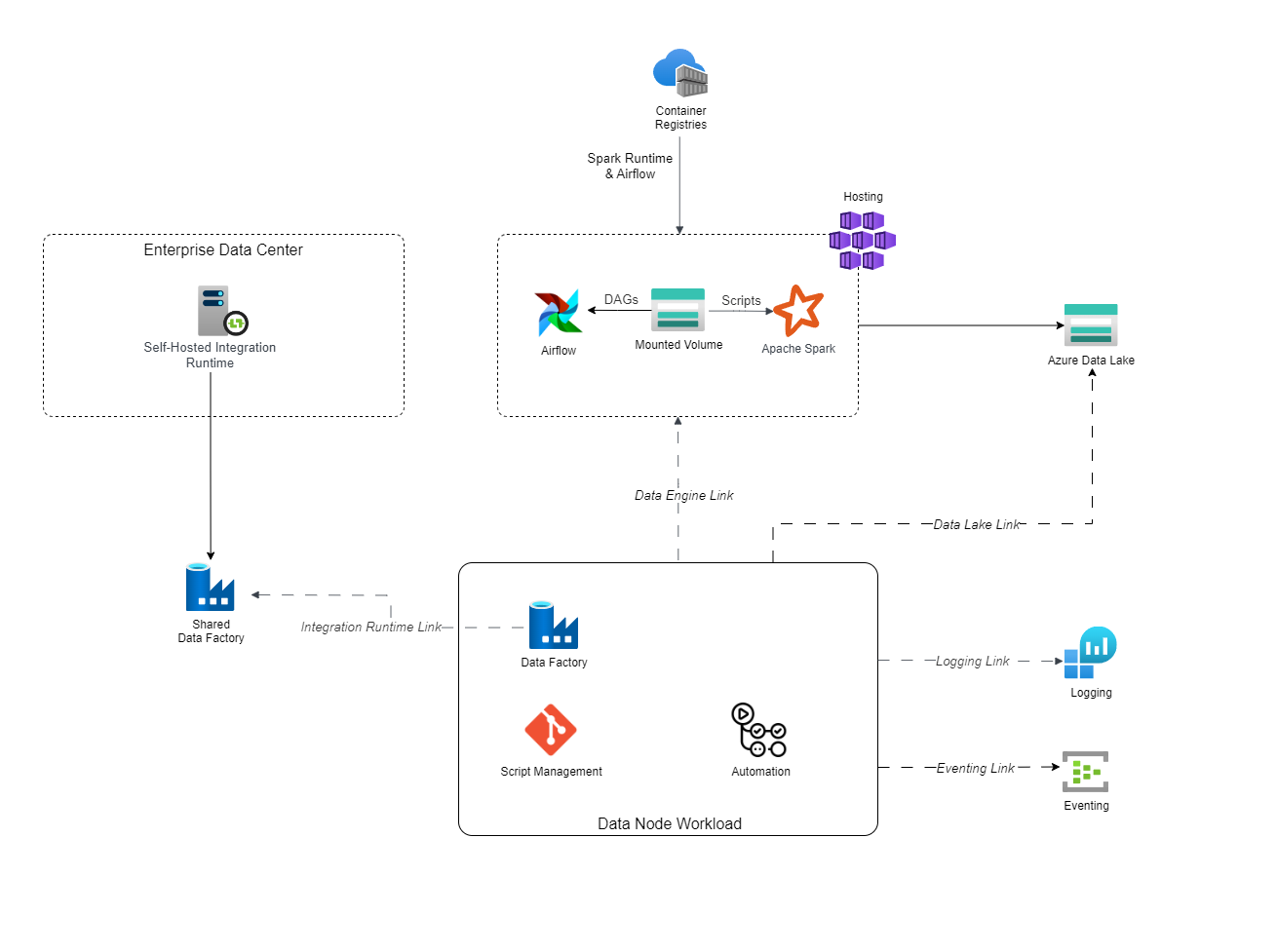

A Data Node workload is the primary way business systems are integrated into the Scaled Sense platform, making their data available to other services. This workload provides a well-defined approach to ensuring data consistency, quality, and governance across the organization.

Systems may be integrated directly into this service using Azure Data Factory[1] where the integration is straightforward and connectors exist. For more complex business logic, custom integrations or other third-party tools may be used, with their output data targeted to land in this service. From there, this workload takes over the sometimes tedious process of preparing the data for use by other services.

Architecture

Capabilities

Add-ons

SQL Copy Integration

The SQL Copy Integration Add-on configures an Azure Data Factory pipeline and other supporting Azure resources to enable the sourcing of data from a SQL database. Data is pulled from the configured database and loaded into the linked Azure Data Lake for further ingestion and processing.

To use this add-on to access a SQL Server located on-premises or without a public endpoint, an Integration Runtime Link is required to establish a connection to the target server.

Transient File Copy Integration

The Transient File Copy Integration Add-on configures an Azure Data Factory pipeline and other supporting Azure resources to enable the sourcing of data from a file drop location. An Azure Storage Account is provisioned with containers for each configured Data Object. Files placed in the respective drop folders are loaded into the linked Azure Data Lake for further ingestion and processing.

This add-on supports loading both Excel and delimited files, depending on the configuration of the add-on and of the Data Objects in the workload.
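To illustrate the distinction between the two supported file types, the following is a minimal sketch of how dropped files could be classified and how a delimited file could be parsed. It is not the add-on's implementation: the suffix sets and the function names `classify_drop_file` and `read_delimited` are hypothetical, and the real behavior is driven by the add-on and Data Object configuration, which this document does not detail.

```python
import csv
import io
from pathlib import PurePosixPath

# Hypothetical suffix sets; the add-on's actual rules come from its configuration.
EXCEL_SUFFIXES = {".xlsx", ".xls"}
DELIMITED_SUFFIXES = {".csv", ".tsv", ".txt"}


def classify_drop_file(path: str) -> str:
    """Return 'excel', 'delimited', or 'unsupported' based on the file suffix."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in EXCEL_SUFFIXES:
        return "excel"
    if suffix in DELIMITED_SUFFIXES:
        return "delimited"
    return "unsupported"


def read_delimited(text: str, delimiter: str = ",") -> list[dict[str, str]]:
    """Parse delimited text with a header row into one dict per data row."""
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))
```

Keeping the delimiter as a parameter mirrors the idea that the file layout is a per-Data-Object setting rather than something inferred from the file itself.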

Airflow

The Airflow Add-on deploys the components of an Apache Airflow installation to the linked Hosting workload (via the Data Engine Link). This add-on is intended for integrations whose requirements exceed the capabilities of the other provided "no code" integration options. Airflow can perform a wide variety of tasks using a "low code" approach with Python. Workflows authored in Airflow, also known as DAGs, can integrate with APIs and other data sources to fetch data. This data can then be ingested into the Data Lake for processing and modeling.
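The fetch-then-ingest flow described above can be sketched in plain Python. This is an illustration under stated assumptions, not the platform's code: the function names, the date-partitioned path layout, and the newline-delimited JSON format are all hypothetical. The `fetch` and `write` callables stand in for an API client and a Data Lake writer, so the sketch runs without Airflow or network access. In a real DAG, a function like `extract_and_land` would typically be registered as a task, for example with Airflow's `@task` decorator.

```python
import json
from datetime import date
from typing import Callable, Iterable


def build_landing_path(source_system: str, data_object: str, run_date: date) -> str:
    """Build a date-partitioned path (hypothetical layout, not the platform's)."""
    return f"{source_system}/{data_object}/{run_date:%Y/%m/%d}/data.jsonl"


def to_json_lines(records: Iterable[dict]) -> str:
    """Serialize records as newline-delimited JSON, one record per line."""
    return "".join(json.dumps(record, sort_keys=True) + "\n" for record in records)


def extract_and_land(
    fetch: Callable[[], Iterable[dict]],
    write: Callable[[str, str], None],
    source_system: str,
    data_object: str,
    run_date: date,
) -> str:
    """Fetch records from a source and write them to the landing path.

    `fetch` and `write` are injected so the same logic can be exercised
    with fakes in tests and with real clients inside a workflow task.
    """
    path = build_landing_path(source_system, data_object, run_date)
    write(path, to_json_lines(fetch()))
    return path
```

Injecting the source and destination keeps the task body small and testable, which is useful because DAG code is otherwise only exercised when the scheduler runs it.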

Diagnostic Logging

The Diagnostic Logging Add-on is automatically configured upon adding a Logging Link. This add-on associates the included Application Insights[2] instance with the linked Log Analytics[3] workspace. In addition, diagnostic logging is enabled for the cloud resources included with the workload, and those logs are sent to the same linked workspace.

Links

Data Management Link

A Data Management Link associates a Data Node with the Product's Data Lake. Through this association, the integrations and credentials from this workload are granted access to load and transform data in the Data Lake.

Data Engine Link

A Data Engine Link configures the resources necessary to execute the Service's data pipelines. The current data engine uses Spark on Kubernetes. Once the link is configured successfully, data scripts pushed to the service's associated repository are used by the data engine to run data pipelines.

Integration Runtime Link

An Integration Runtime Link can be configured when the Data Factory resource requires a connection to a non-public data source, such as an on-premises SQL server. This link adds to this Service's Data Factory a linked self-hosted integration runtime[4] that has been provisioned in a Shared Data Factory.

Eventing Link

An Eventing Link allows integrations within this service to send events to, and receive events from, the linked event broker. This capability allows near real-time processing of data as it arrives from sources and moves through transformation processes.

Logging Link

A Logging Link collects the necessary information from the linked Log Analytics workspace and triggers the provisioning of the Diagnostic Logging Add-on.

Properties

Source System Name

Network-based Firewall

Data Processes

Additional Broker Consumer Topics

Transient File Copy Integration

Airflow Connection Secrets

Airflow Broker Event Mapping

Use Cases

- Replicating data from an on-premises SQL server and enabling the use of the data throughout the Scaled Sense platform.

- Integrating with a third-party API to further enrich organizational data.

- Creating a manual file drop process for ingesting user-generated information.

References

- [1] https://learn.microsoft.com/en-us/azure/data-factory

- [2] https://learn.microsoft.com/en-us/azure/azure-monitor/app/app-insights-overview

- [3] https://learn.microsoft.com/en-us/azure/azure-monitor/logs/log-analytics-overview

- [4] https://learn.microsoft.com/en-us/azure/data-factory/create-self-hosted-integration-runtime?tabs=data-factory